This is a compilation of the 5 most common mistakes I have encountered during my career as a Platform Engineer: while conducting workshops for many teams with varying experience levels, deploying clusters for multiple environments and creating namespaces for different purposes.

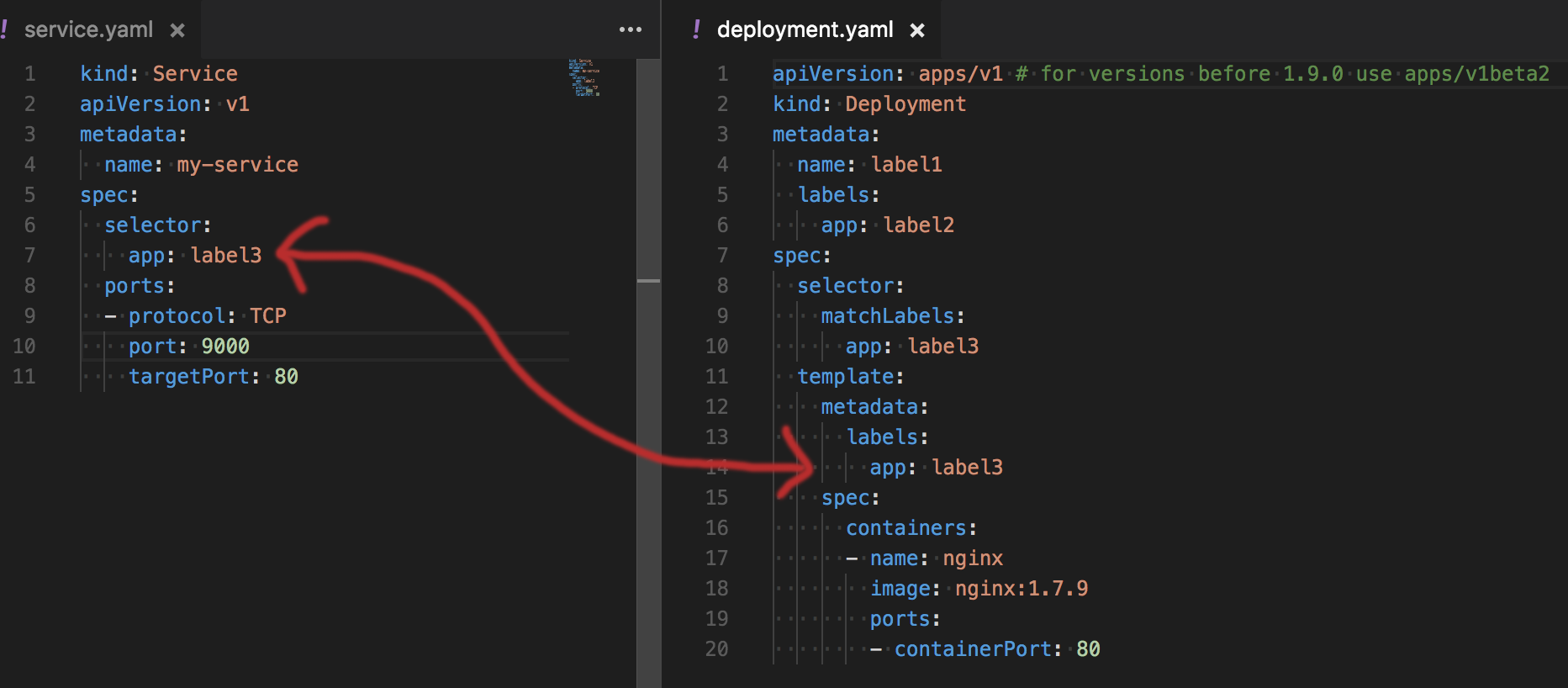

1. The Service’s selector does not match the Deployment’s labels

Many objects, even within a single YAML file, carry labels. This is often confusing when you want to expose one of your Deployments through a Service. The accompanying picture shows which label property has to match the selector in your Service: the labels in the Deployment’s pod template (`spec.template.metadata.labels`), not the labels on the Deployment object itself. It becomes much clearer once you realise that a Service acts as a load balancer that routes traffic to all pods whose labels match its selector. Remember also that, by default, each connection is routed to a randomly chosen pod with that label (this is how kube-proxy behaves in its default iptables mode).
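
The minimal sketch below shows where each label lives. It wraps a hypothetical Deployment and Service in a shell function so the manifest can be piped straight into kubectl. The name `my-app`, the container name `web`, the function name `print_manifest` and the ports 9000/8080 are placeholders; the image is the placeholder used later in this article.

```shell
# Hypothetical helper: prints a Deployment and a Service whose labels line up.
# All names and ports are placeholders chosen for illustration.
print_manifest() {
  cat <<'EOF'
apiVersion: apps/v1
kind: Deployment
metadata:
  name: my-app
  labels:
    app: my-app          # labels the Deployment object itself; the Service ignores these
spec:
  replicas: 2
  selector:
    matchLabels:
      app: my-app        # tells the Deployment which pods it owns
  template:
    metadata:
      labels:
        app: my-app      # pod labels: THIS is what the Service selector must match
    spec:
      containers:
        - name: web
          image: your_company/your_image:0.1
          ports:
            - containerPort: 8080
---
apiVersion: v1
kind: Service
metadata:
  name: my-app
spec:
  selector:
    app: my-app          # must equal the pod template labels above
  ports:
    - port: 9000         # port that clients use to reach the Service
      targetPort: 8080   # port the container listens on
EOF
}
```

Running `print_manifest | kubectl apply --namespace test -f -` applies both objects. If the selector and the pod labels disagree, `kubectl get endpoints my-app --namespace test` shows no pod addresses for the Service, which is a quick way to spot this mistake.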

2. Running commands without specifying a namespace

While conducting workshops for beginners, I noticed that whenever kubectl commands did not work as expected, the cause was usually a namespace missing from the command. Therefore one of the first things I teach my students is: always specify a namespace before running any command. If you don’t, your Deployment, Service or Ingress will land in the default namespace (unless the manifest or your current kubectl context says otherwise), which is usually not what you wanted in the first place.

Example:

Instead of:

```shell
kubectl apply -f deployment.yaml
```

Run:

```shell
kubectl apply -f deployment.yaml --namespace test
```
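
If remembering the flag is the problem, a small wrapper can make the namespace a required argument. This is a minimal sketch; `kns` is a hypothetical shell function, not part of kubectl.

```shell
# Hypothetical wrapper: refuses to run kubectl unless a namespace is given first.
# Usage: kns <namespace> <kubectl arguments...>
kns() {
  if [ "$#" -lt 2 ]; then
    echo "usage: kns <namespace> <kubectl arguments...>" >&2
    return 1
  fi
  ns="$1"
  shift
  # --namespace is a global kubectl flag, so it may precede the subcommand.
  kubectl --namespace "$ns" "$@"
}
```

With it, `kns test apply -f deployment.yaml` runs `kubectl --namespace test apply -f deployment.yaml`, while a bare `kns apply` fails with the usage message instead of silently targeting the default namespace. kubectl itself also accepts the short form `-n test`.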

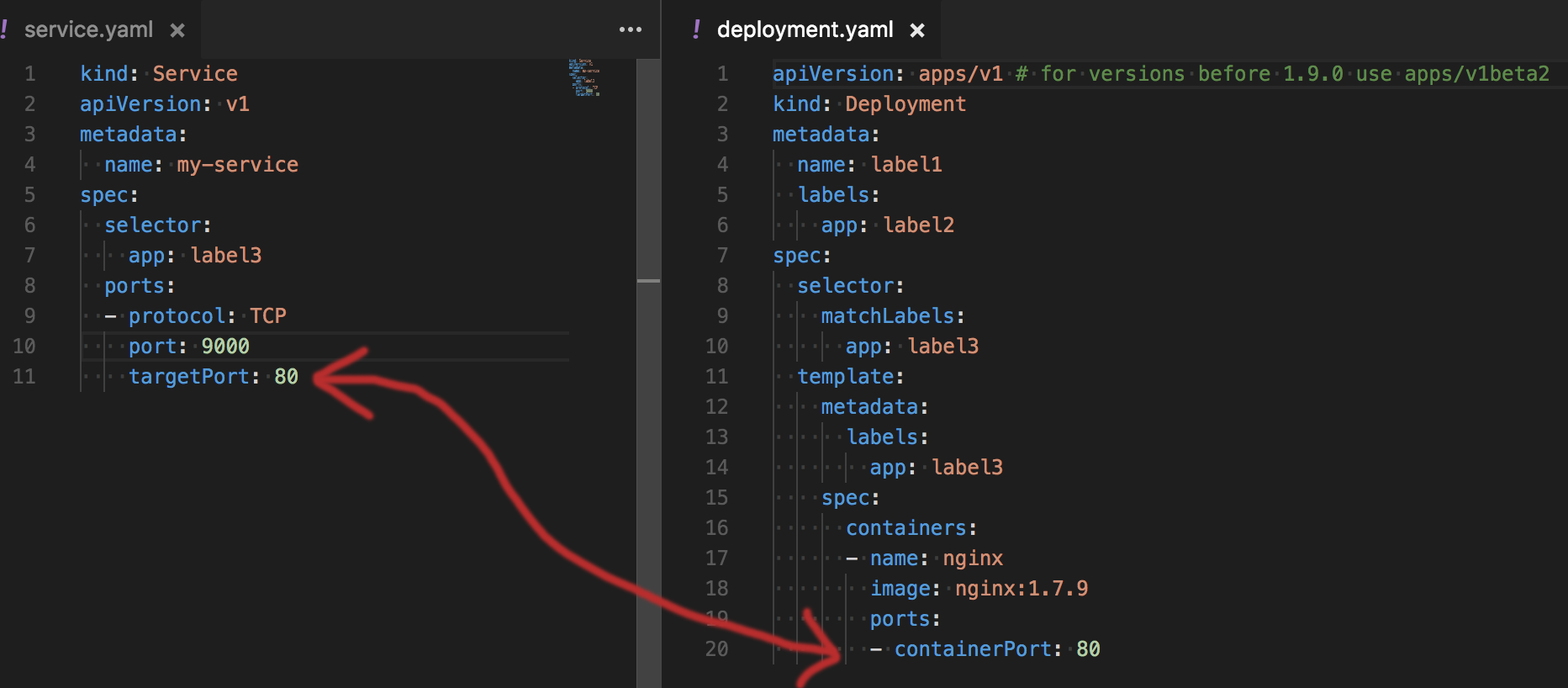

3. Wrong container port mapped to a service

Because a Service has two easily confused parameters, targetPort and port, people often mix them up, which results in a “Connection Refused” error or no reply from the container at all.

To avoid that mistake, remember that from the Service’s perspective, targetPort is the port on your pods that the Service forwards traffic to, so it has to be the port your container actually listens on. On the other side of the equation, pods that want to use your Service connect to its port, which is 9000 in the accompanying image.

There is nothing wrong with targetPort being the same as port (if you omit targetPort, it defaults to the value of port), but you should still know which one is which.
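
To catch this mistake before clients hit it, you can compare the Service’s targetPort with the port declared by the container. This is a minimal sketch, assuming a single-port Service, a Deployment with one container and a numeric targetPort (a named port such as "http" would need a different comparison); `check_target_port` is a hypothetical helper. Note that containerPort is informational, so a match only confirms that the manifests agree, not that the process really listens on that port.

```shell
# Hypothetical helper: compares the Service's first targetPort with the first
# containerPort of the first container in the Deployment's pod template.
# Usage: check_target_port <namespace> <service> <deployment>
check_target_port() {
  ns="$1" svc="$2" deploy="$3"
  target="$(kubectl get service "$svc" --namespace "$ns" \
    -o jsonpath='{.spec.ports[0].targetPort}')" || return 2
  container="$(kubectl get deployment "$deploy" --namespace "$ns" \
    -o jsonpath='{.spec.template.spec.containers[0].ports[0].containerPort}')" || return 2
  if [ "$target" = "$container" ]; then
    echo "OK: targetPort $target matches containerPort"
  else
    echo "MISMATCH: Service $svc targetPort=$target, containerPort=$container" >&2
    return 1
  fi
}
```

For example, `check_target_port test my-app my-app` (placeholder names) exits with status 1 and prints a mismatch when the two ports disagree.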

4. Running commands against the wrong cluster

When your company starts to treat its clusters more seriously and you move away from a Minikube or Vagrant installation into the cloud, it becomes dangerous not to check which cluster is currently the “active” one in kubectl. That is why I always check the current kubectl configuration before invoking any further commands, by running:

```shell
kubectl cluster-info
```

and making sure that the cluster URL is the right one.
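
In scripts, the manual URL check can be complemented with a guard. This is a minimal sketch, assuming your kubeconfig contexts are named after environments (the context names here are hypothetical); `require_context` is a hypothetical helper built on the real `kubectl config current-context` command.

```shell
# Hypothetical guard: returns non-zero unless kubectl points at the expected context.
# Usage: require_context <expected-context-name>
require_context() {
  expected="$1"
  current="$(kubectl config current-context)" || return 2
  if [ "$current" != "$expected" ]; then
    echo "Refusing to continue: current context is '$current', expected '$expected'" >&2
    return 1
  fi
}
```

A deployment step could then read `require_context staging && kubectl apply -f deployment.yaml --namespace test`, so nothing is applied if another context is active. `kubectl config get-contexts` lists the contexts available in your kubeconfig.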

I also recommend keeping critical cluster configurations, such as production ones, in a separate kubeconfig file. That minimises the chance of using them by accident.

If you want to use a config stored in a file other than the default ~/.kube/config, set its location for the current shell session with the KUBECONFIG environment variable:

```shell
export KUBECONFIG=~/kube/production_cluster_config
```

After that, every kubectl command in that terminal session runs against the production cluster.
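
If exporting KUBECONFIG for a whole session feels too broad, the production config can be scoped to single commands instead. This is a minimal sketch; `prod_kubectl` is a hypothetical helper that sets KUBECONFIG only for the one kubectl call it wraps (kubectl’s global `--kubeconfig` flag achieves the same per command).

```shell
# Hypothetical helper: uses the production kubeconfig for this one invocation only.
# The path matches the export example in this section.
prod_kubectl() {
  KUBECONFIG="$HOME/kube/production_cluster_config" kubectl "$@"
}
```

`prod_kubectl cluster-info` then talks to production, while plain `kubectl` commands in the same terminal keep using ~/.kube/config.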

5. Trying to use an image pull secret that is not in the namespace

The first thing you will do when introducing Kubernetes to your company is set up a private Docker registry to host your images. To use them inside Kubernetes, you need a registry secret deployed in each namespace of your cluster (unless you’re using Google Cloud for both the registry and Kubernetes).

This step is often missed when creating namespaces: you create a namespace but forget to apply the image pull secret to it.

You will usually notice this only after deploying new pods to the cluster, when they get stuck in ErrImagePull or ImagePullBackOff and show errors such as:

```
Failed to pull image "your_company/your_image:0.1": Error: image your_company/your_image not found
```
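
One way to avoid this is to make the secret part of namespace setup. This is a minimal sketch, assuming a secret named `regcred` and pods that run under the namespace’s `default` ServiceAccount; `add_pull_secret` and the registry host are hypothetical. It creates the registry secret and patches the ServiceAccount so that new pods use the secret without listing imagePullSecrets themselves.

```shell
# Hypothetical helper: creates a registry secret in one namespace and attaches it
# to that namespace's default ServiceAccount.
# Usage: add_pull_secret <namespace> <registry-server> <username> <password>
add_pull_secret() {
  ns="$1" server="$2" user="$3" password="$4"
  kubectl create secret docker-registry regcred \
    --namespace "$ns" \
    --docker-server="$server" \
    --docker-username="$user" \
    --docker-password="$password" || return 1
  # Pods created from now on under the default ServiceAccount will use regcred.
  kubectl patch serviceaccount default --namespace "$ns" \
    -p '{"imagePullSecrets": [{"name": "regcred"}]}'
}
```

For example: `kubectl create namespace test && add_pull_secret test registry.example.com "$REGISTRY_USER" "$REGISTRY_PASSWORD"`. Pods that already exist are not changed, so recreate any that are stuck in ImagePullBackOff.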